Case Study: Grading Assistant

As the LLM revolution started reverberating across the world, NoRedInk was in an interesting position. It had no prior experience with AI or machine learning, but its whole reason for being, teaching English Language Arts, was exactly what the early models seemed best able to support. A huge opportunity to advance our mission had arrived out of the blue, but it wasn't yet clear what that opportunity would look like.

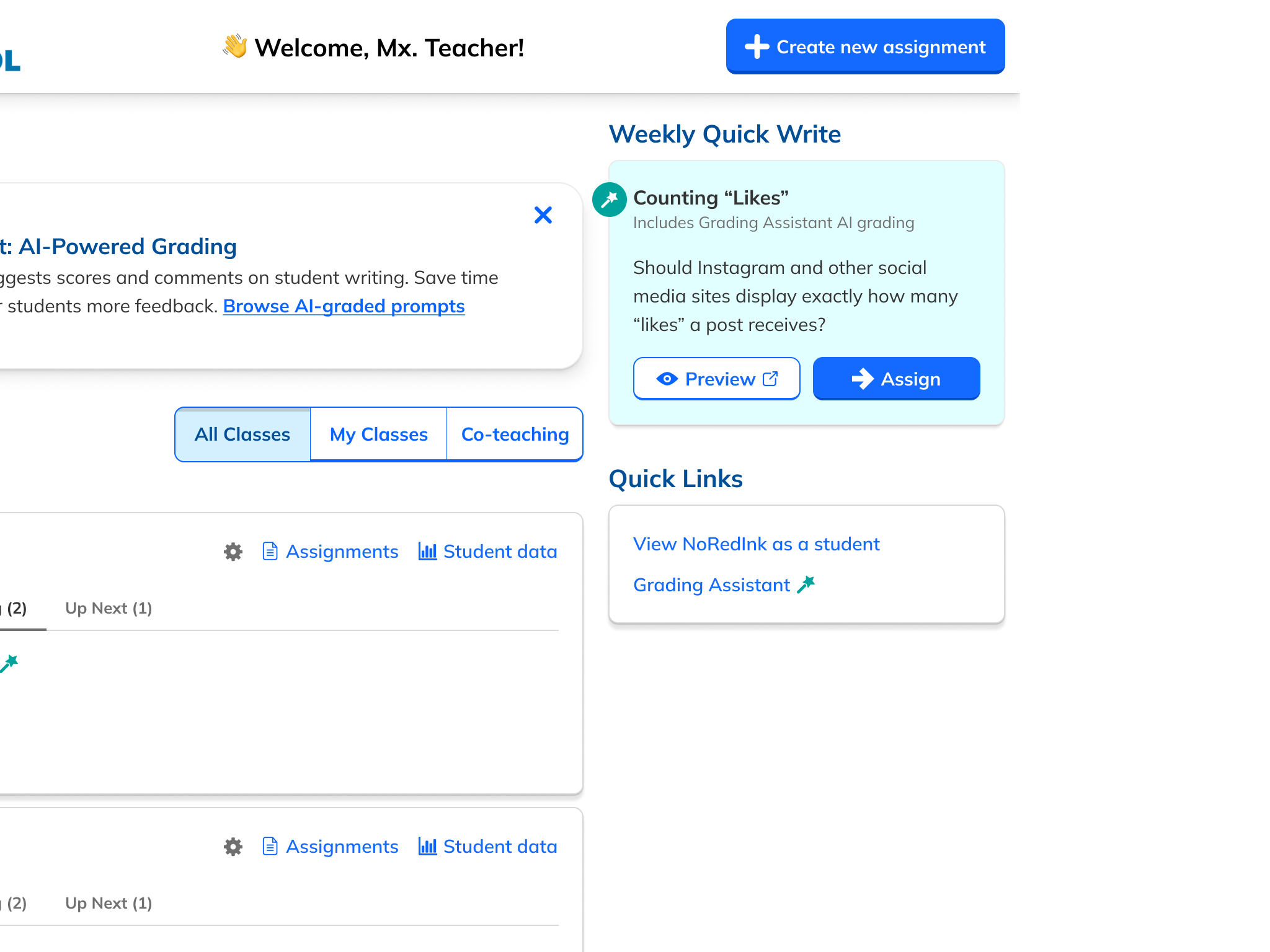

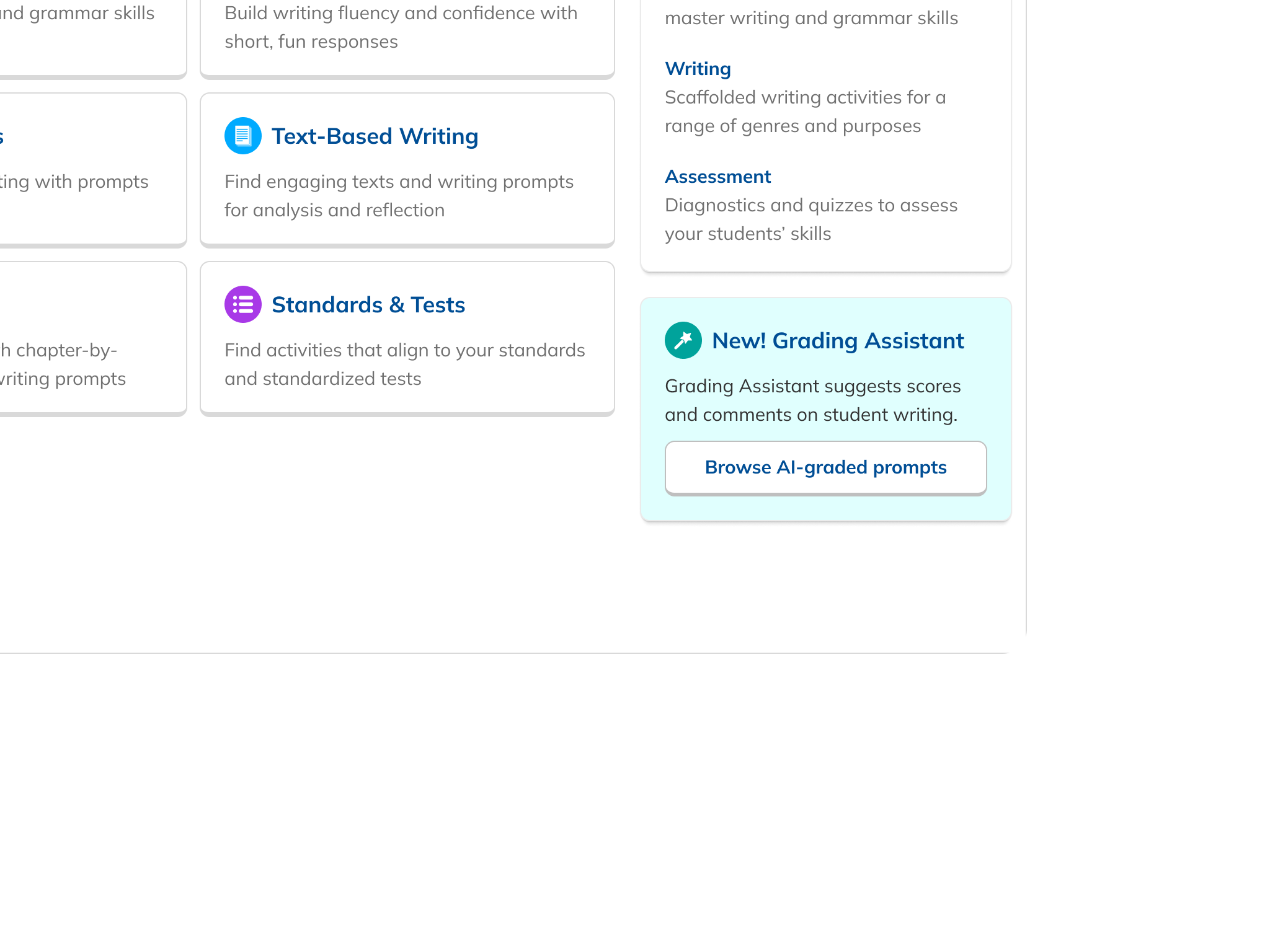

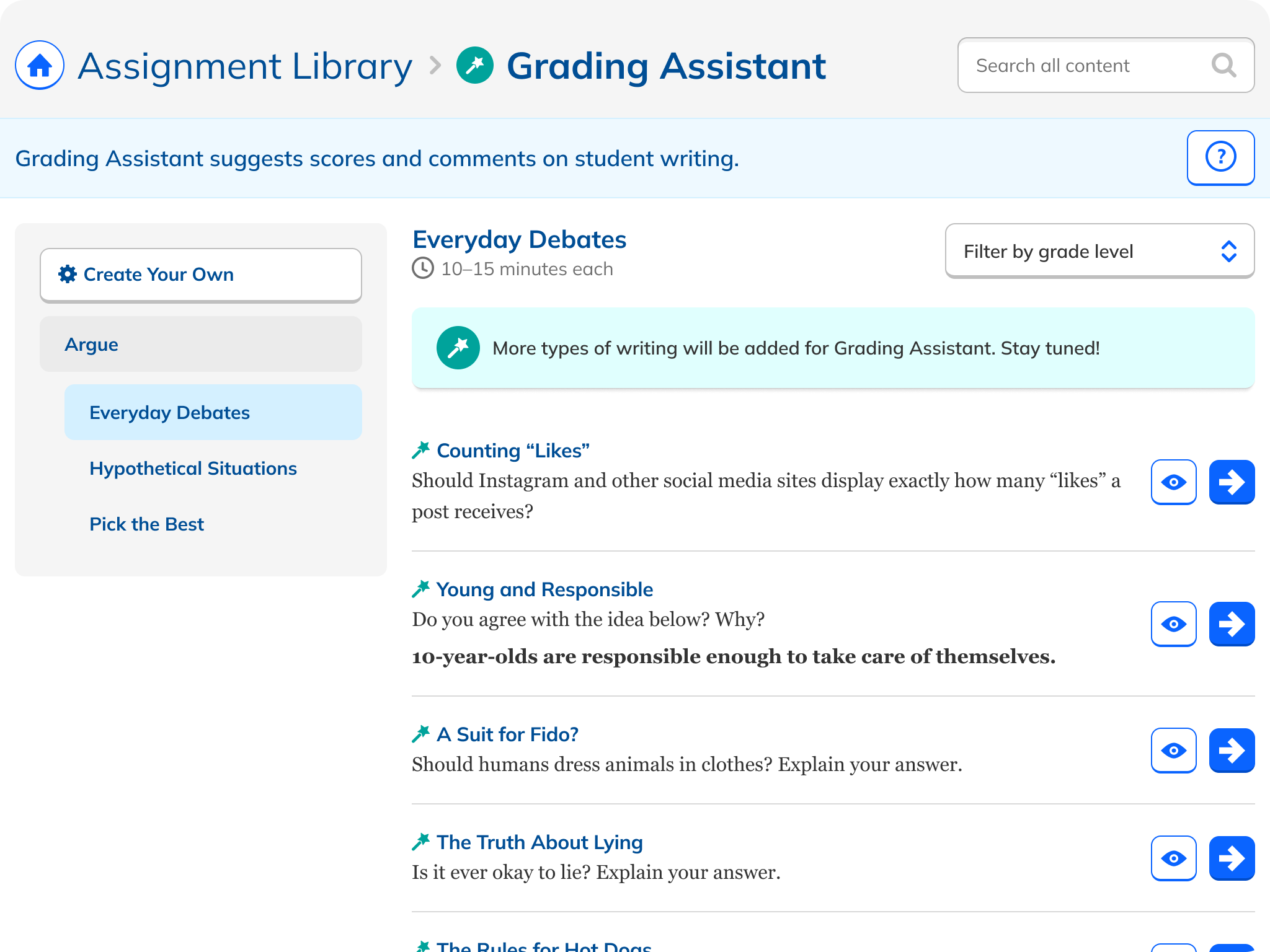

What was clear: companies were employing "spray-on AI" to ride the early wave, bolting on LLM-powered features that were more about checking a box than meaningfully improving their products. NoRedInk wanted to take a more thoughtful, long-term approach. After early ideation, we arrived at automatic grading: certainly not a new concept in the industry, but one we felt LLMs were uniquely suited to, and a feature that would serve NoRedInk's teachers and students in a fundamental way.